Basics of Search Engines: How Search Engines Work

Introduction

I am Gopal, a marketing specialist. Let's delve into a new topic in the SEO module: how search engines operate. In this module, we will cover what a search engine is, the concepts of crawling and indexing, understanding crawl budgets, and optimizing your products. Let's start with a brief definition of a search engine.

What is a Search Engine?

A search engine is a software program designed to help people find information online using keywords or phrases. Examples include Google, Microsoft Bing, and Yahoo, which you can use to locate specific information on the web. These top search engines organize information about web pages in a structured format.

Now, let's understand how search engines work when you input a particular query. We will explore the backend activities that occur within the search engine. There are three main components of a search engine: the query engine, the crawler, and the indexer.

Components of a Search Engine

- Query Engine: Processes user queries.

- Crawler: Reads information from websites.

- Indexer: Indexes and stores crawled information.

Crawling

Crawling involves reading content on web pages and storing that information in a database. For instance, if you have a website with various types of information such as text, images, and videos, a web crawler or spider will visit your site, extract the information, and save it in a database.

Consider your website. A web crawler or spider starts from your site or a specific seed URL.

It then scans all the information on that page and saves it in a database. If there are links on your webpage, the crawler follows them, visiting the linked pages and extracting their information. This recursive process continues for every new link it discovers.
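The link-following process described above can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the `PAGES` dictionary is a made-up, in-memory stand-in for the web (no real network requests are made), and the `crawl` function name and the example.com URLs are hypothetical.

```python
from collections import deque

# Hypothetical in-memory "web": URL -> (page text, links found on that page).
PAGES = {
    "https://example.com/": ("Home page", ["https://example.com/about", "https://example.com/blog"]),
    "https://example.com/about": ("About us", ["https://example.com/"]),
    "https://example.com/blog": ("Blog index", ["https://example.com/blog/post-1"]),
    "https://example.com/blog/post-1": ("First post", ["https://example.com/about"]),
}

def crawl(seed_url, pages):
    """Start at seed_url, follow links, and return a {url: text} database."""
    database = {}
    queue = deque([seed_url])   # pages waiting to be visited
    seen = {seed_url}           # pages already queued, so none is visited twice
    while queue:
        url = queue.popleft()
        if url not in pages:
            continue            # unknown page: nothing to read
        text, links = pages[url]
        database[url] = text    # "save it in a database"
        for link in links:
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return database

database = crawl("https://example.com/", PAGES)
print(sorted(database))
```

The `seen` set is what keeps the process from looping forever when pages link back to each other, as the home and about pages do here.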

The crawler operates based on certain policies.

Crawl Policies:

- Selection Policy: Determines which pages the crawler should visit and download, and which it should skip.

- Revisit Policy: Determines when the crawler should revisit a website and incorporate any changes into its database. For example, if a web crawler visits your website and reads all the information, and a few days later you make changes to a web page, Google's crawler will revisit your website, crawl the changed content, and store those changes in its database.

- Parallelization Policy: Crawlers run multiple processes simultaneously to explore links, which is known as distributed crawling. Suppose your website is A, and a link to it appears on another website, B. A crawler process reading website B finds the link and then visits your site through it, and the same thing happens across many sources at once.

- Politeness Policy: Politeness is governed by a setting called "crawl delay." Crawl delay is the short pause a crawler takes after downloading information from a website before it sends the next request, so that it does not overload the site's server.
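As a rough illustration of the selection and politeness policies, the sketch below uses Python's standard `urllib.robotparser` module to read a robots.txt file. The robots.txt content, the `ExampleBot` user agent, and the `plan_visit` helper are all made up for this example. Note that the `Crawl-delay` value in robots.txt is conventionally given in seconds, and that support for it varies between crawlers.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an example site (not from any real website).
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

def plan_visit(url, user_agent="ExampleBot"):
    """Return (allowed, delay_in_seconds) for a URL, based on robots.txt."""
    allowed = parser.can_fetch(user_agent, url)   # selection: visit or skip
    delay = parser.crawl_delay(user_agent) or 0   # politeness: pause between requests
    return allowed, delay

print(plan_visit("https://example.com/blog"))
print(plan_visit("https://example.com/private/data"))
```

A polite crawler would skip any URL where `allowed` is `False` and wait `delay` seconds before its next request to the same site.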

Now, let's explore how crawling works. Imagine Google as your search engine.

The web spider or crawler goes to your website ahead of time, reads every web page, copies the information, and stores it in the database. When a user later enters a query, the search engine retrieves and displays results from this database rather than crawling your site at that moment. This is how the crawler operates.

Indexing

After crawling, we move to indexing. Once the search engine's crawler has gathered information from your website, the next step is indexing. Indexing is akin to creating an index for a book. If you want to find a specific topic, you can either page through from the first page or consult the index, locate the corresponding page number, and go straight to the information you seek.

Consulting the index is the easier route: look up the topic and go to that page. The same thing happens in a search engine's database, which holds a huge amount of information. Answering a user's query by scanning that whole database would be really difficult.

Therefore, search engines index pages by keyword. When someone searches for a particular query, the search engine looks up the relevant keywords in the index and returns the matching information to the user.

After the search engine crawls all over the internet, it creates an index of all the web pages it finds.

Several factors contribute to creating an efficient indexing system:

- The storage used by the index

- The size of the index

- The ability to quickly find documents containing the searched keyword.

These factors determine the efficiency and reliability of an index.

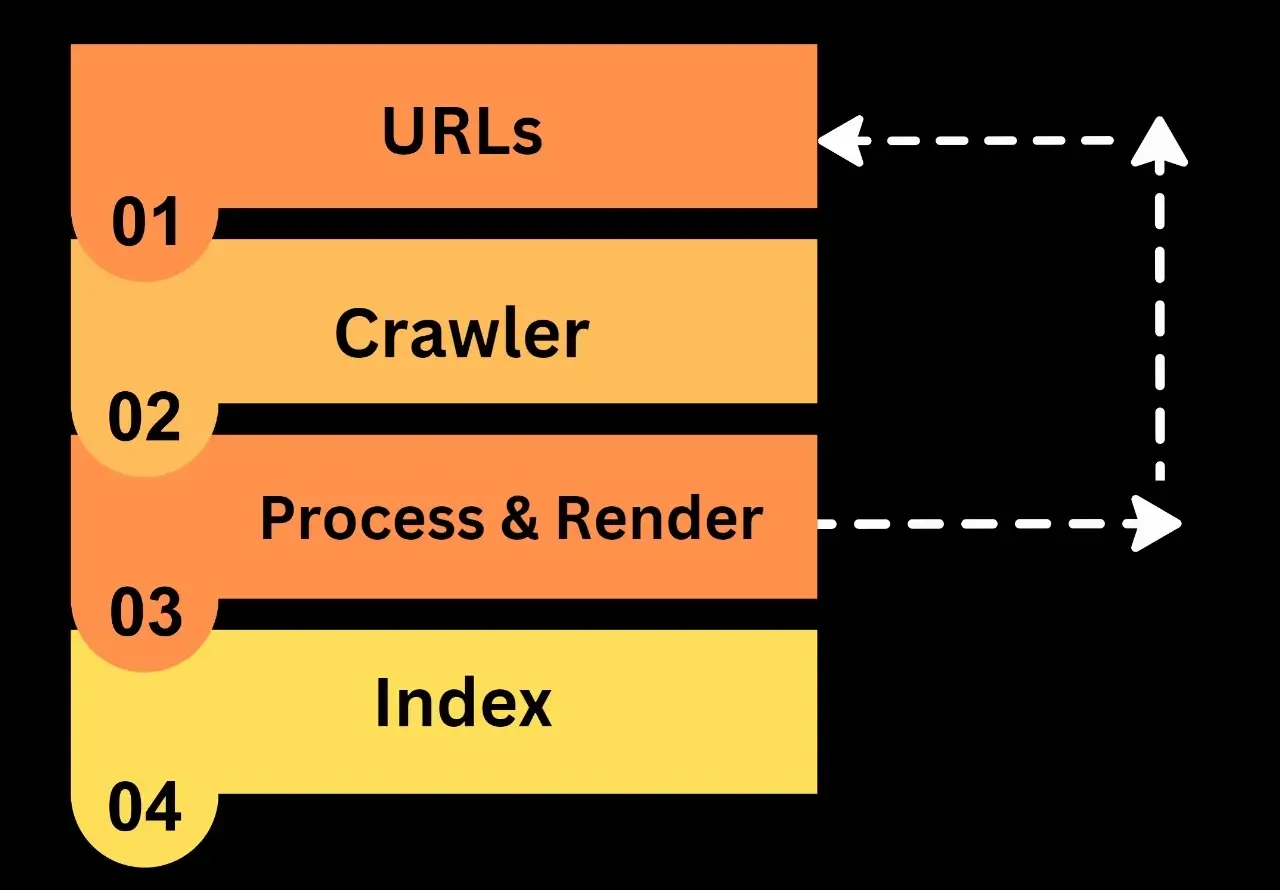

Here's how indexing happens: a user makes a search query, and the query is processed by the query engine. The documents being searched are the HTML pages of your website.

The indexer extracts keywords from your web pages and stores them in its index file or repository. When a user enters a query, the query engine looks in the index file, gets a list of matching pages, and shows the results page, so the relevant information is visible to the user.
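The flow above can be sketched as two small Python functions: one playing the indexer and one playing the query engine. This is a minimal sketch, not how any real search engine is implemented. The page paths and texts are invented, and the rule that a page must contain every word of the query is an assumption made to keep the example short.

```python
import re
from collections import defaultdict

# Hypothetical crawled pages: path -> text.
PAGES = {
    "/crawling": "How a web crawler visits pages",
    "/indexing": "How the indexer stores keywords from pages",
    "/budget": "Understanding crawl budget",
}

def tokenize(text):
    """Lowercase the text and split it into simple keyword tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def build_index(pages):
    """Indexer: map each keyword to the set of pages that contain it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return index

def search(index, query):
    """Query engine: return pages containing every word in the query."""
    words = tokenize(query)
    if not words:
        return []
    matches = set(index.get(words[0], set()))
    for word in words[1:]:
        matches &= index.get(word, set())
    return sorted(matches)

index = build_index(PAGES)
print(search(index, "pages"))             # ['/crawling', '/indexing']
print(search(index, "indexer keywords"))  # ['/indexing']
```

The query never touches the page texts themselves. It only looks up keywords in the index, which is why the lookup stays fast as the number of pages grows.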

Types of Indexing:

There are two types of indexing: the forward index and the backward index (more commonly called an inverted index).

- Forward Index: Stores, for each document, all the keywords it contains.

- Backward Index: Is built by inverting the forward index, so that each keyword points to the group of documents containing it.

In simple language, the forward index stores the keywords of each document, and the backward index groups documents under the keywords they contain.
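To make the difference concrete, here is a small Python sketch that converts a forward index into a backward index (often called an inverted index). The document IDs, keywords, and the `invert` function name are made up for illustration.

```python
# Forward index: each document lists the keywords it contains.
forward_index = {
    "doc1": ["seo", "crawler", "index"],
    "doc2": ["seo", "ranking"],
    "doc3": ["crawler", "ranking"],
}

def invert(forward):
    """Build a backward (inverted) index: keyword -> documents containing it."""
    inverted = {}
    for doc_id, keywords in forward.items():
        for keyword in keywords:
            inverted.setdefault(keyword, []).append(doc_id)
    return inverted

inverted_index = invert(forward_index)
print(inverted_index["seo"])      # ['doc1', 'doc2']
print(inverted_index["crawler"])  # ['doc1', 'doc3']
```

With the forward index alone, finding every document about "seo" means scanning all documents. With the backward index, it is a single lookup.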

How Search Engines Work

How does the search engine work? The web spider crawls every piece of information from the web pages, and the indexer indexes it and stores it in the database. When a user enters a query, the query engine fetches matching data from the index file, the ranking algorithm orders it, and the results are sent to the user.

This is how a search engine works. In my view, Google also holds a lot of users' search history, so it can show relevant results based on its analysis of that history.

This information will assist website owners in evaluating their search engine rankings, fostering a comprehensive understanding of how ranking factors operate. It aims to facilitate the analysis of results based on these factors.

Crawl Budget

The crawl budget is the number of times a search engine spider crawls your website in a given period. It's essentially how often the web spider visits your website. To optimize your crawl budget and rank on the search engine's first page, you need to implement SEO strategies and understand the processes involved in SEO. These strategies include avoiding rich media files, optimizing internal and external links, using social channels, and making the website more search engine-friendly.

In this blog, we covered how search engines work. Next, we'll delve deeper into SEO.