Google recently updated its Search Central documentation to confirm that it supports only four specific fields in robots.txt files. Google’s crawlers ignore any unsupported fields. The update gives website owners and developers clear guidance so they can avoid directives that won’t be recognized.

Key Update

Google’s documentation now states that its crawlers do not support robots.txt fields that are not explicitly listed. This reduces confusion and helps website owners use only supported directives.

“We often get asked about fields that aren’t specifically mentioned as supported, and we want to make it clear that they are not.”

This clarification should stop websites from relying on unsupported directives that have no effect on how Google crawls their pages.

What Does This Mean for You?

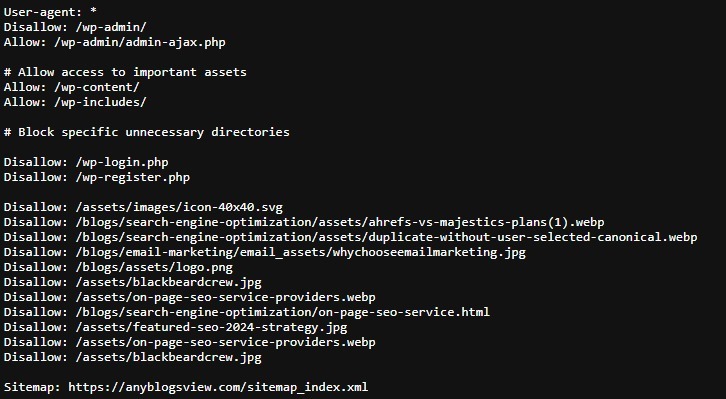

If you manage a website, check your robots.txt file to make sure it uses only supported fields. Unsupported fields, such as third-party or custom directives, have no effect on how Google crawls your site.

Google officially supports the following four fields:

- user-agent

- allow

- disallow

- sitemap

Any other directives, such as the commonly used “crawl-delay,” are not supported by Google, though other search engines may still recognize them. It’s also worth noting that Google is phasing out support for the noarchive directive.
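
One way to audit a robots.txt file against the four supported fields is a small script like the one below. It is only a minimal sketch: the function name `find_unsupported_fields` and the sample file contents are hypothetical, and it assumes a simple `field: value` line format with `#` comments. It is not Google’s parser and does not reproduce every edge case of how Google reads the file.

```python
# Fields listed above as supported by Google in robots.txt.
SUPPORTED_FIELDS = {"user-agent", "allow", "disallow", "sitemap"}


def find_unsupported_fields(robots_txt: str) -> list[tuple[int, str]]:
    """Return (line_number, field_name) for each field not in SUPPORTED_FIELDS.

    Hypothetical helper for illustration; field names are compared
    case-insensitively.
    """
    unsupported = []
    for line_number, raw_line in enumerate(robots_txt.splitlines(), start=1):
        # Drop comments (everything after '#') and surrounding whitespace.
        line = raw_line.split("#", 1)[0].strip()
        if not line or ":" not in line:
            continue  # blank, comment-only, or not a "field: value" line
        field = line.split(":", 1)[0].strip().lower()
        if field not in SUPPORTED_FIELDS:
            unsupported.append((line_number, field))
    return unsupported


# Hypothetical sample file, used only to demonstrate the check.
SAMPLE = """\
User-agent: *
Disallow: /private/
Allow: /private/public-page.html
Crawl-delay: 10
Sitemap: https://example.com/sitemap.xml
"""

for line_number, field in find_unsupported_fields(SAMPLE):
    print(f"line {line_number}: '{field}' is not in the supported list")
```

For the sample above, the script flags only the `Crawl-delay` line. A flagged field is not necessarily a mistake, since other search engines may still honor it; the report simply shows which lines Google will ignore.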

Moving Forward

This update is a useful reminder to keep up with Google’s official guidelines and recommended practices. It underlines the importance of relying on fields that the documentation lists as supported, rather than assuming that unlisted fields might still work.